Bridging the data gap: How we are advancing Digitalcheck – driven by data

Data are an important tool in DigitalService's work: They help us prioritize work steps so that our products serve their users as efficiently as possible, and ultimately they let us verify that our solutions are effective. In one of our projects, however, we have run into a challenge. For Digitalcheck, which supports digital-ready legislation, we currently have no automatically generated data, such as usage figures, that we could use to measure needs and successful outcomes.

This blog post explains how we generate data despite this obstacle and use the information gleaned to advance and refine Digitalcheck.

For context: We gather data continuously in all our projects. When we develop an online form, for example, we analyze the average time users need to complete it, since users want to finish a form as quickly as possible. In that case, two things are obvious: where we can collect data (the form itself) and which factor we can influence (the completion time). Digitalcheck is different. The legislative process is complex and extends over a long period, as our Digitalcheck service landscape vividly illustrates.

Policy drafters in the individual ministries use Digitalcheck independently as part of drafting a law. The German National Regulatory Control Council (NKR) then issues an opinion on how fully the draft legislation makes use of the available digital options. After that, the draft moves on to the cabinet and ultimately to the Bundestag or Bundesrat. Neither the Digitalcheck team at the Federal Ministry of the Interior and Community (BMI) and DigitalService nor the ministries in the inter-ministerial working group, which were also involved in launching Digitalcheck, can directly observe the individual steps along the way.

However, we need data to find out whether and how Digitalcheck is being used, and where there are shortcomings and psychological barriers, but also opportunities. This information is crucial for assessing how much progress we have made toward our goal: Digitalcheck being used across all departments, for all new legislative projects, as early as possible in the drafting process. Just as importantly, it shows us where we can make adjustments to establish Digitalcheck more firmly over the long term.

Generating data with help from partners and users

To gain insight and access to relevant key indicators in this situation, we need to be creative and work closely with our partners who are involved in the legislative process.

One key source is the secretariat at the NKR, which reviews Digitalcheck for individual draft laws and shares statistical data with us once the ministerial legislative process is complete.

Beyond the question of how consistently Digitalcheck is applied, regular feedback from policy drafting personnel – the key target group for Digitalcheck – is especially valuable for us. This is how we learn which problems and opportunities arise during the process. We explore this in several ways:

Regular online surveys amongst policy drafters who have applied Digitalcheck

Support inquiries we receive by e-mail or via the phone hotline

Feedback provided during our Digitalcheck workshops or Digitalcheck consultation hours

Interviews and user tests

Combining and preparing data

To build a meaningful data basis, we combine the information from the various sources and organize it by topic. We then enrich the data with context, for example by linking different parameters or adding information on how they change over time. This turns raw data into information we can build on.

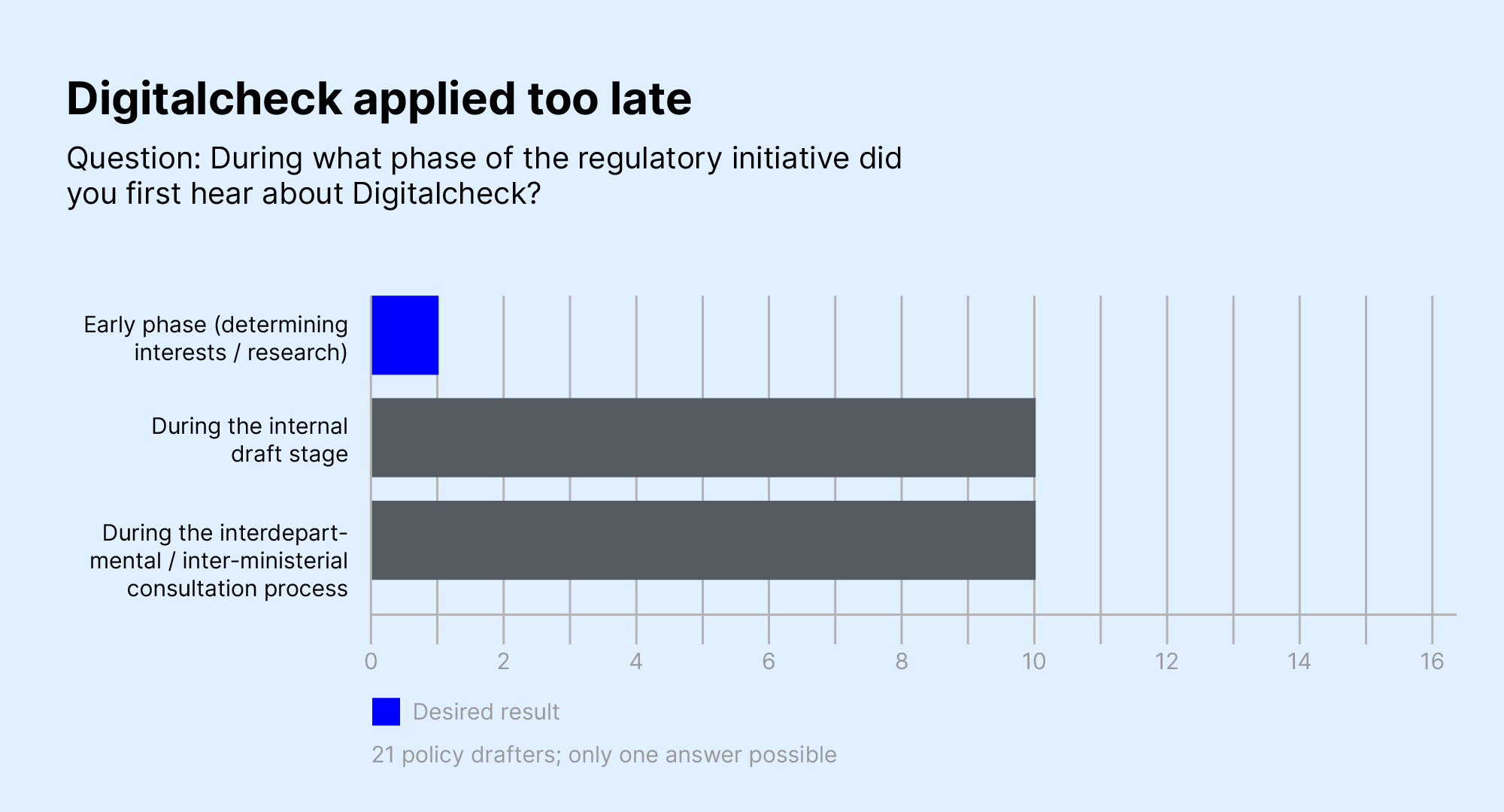

Trends emerge quickly once individual survey responses are grouped by topic. For example, most policy drafters do not hear about Digitalcheck until relatively late in the drafting process, which makes it difficult, if not impossible, for Digitalcheck to realize its full potential.
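The post does not describe the tooling behind this step. As a minimal sketch, assuming each piece of feedback has already been assigned a topic by hand, grouping by topic and adding a monthly breakdown (the "change over time" mentioned above) might look like the following. The `Feedback` record, its fields, and the example entries are hypothetical and do not come from real Digitalcheck data.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass


# Hypothetical record format: the post does not say how feedback is stored.
@dataclass(frozen=True)
class Feedback:
    source: str  # e.g. "survey", "support", "workshop" (illustrative labels)
    month: str   # "YYYY-MM"
    topic: str   # topic assigned during manual categorization


def count_by_topic(records):
    """Count how often each topic appears across all sources combined."""
    return Counter(r.topic for r in records)


def topic_trend(records):
    """Enrich the counts with change over time: topic -> {month: count}, months sorted."""
    trend = defaultdict(Counter)
    for r in records:
        trend[r.topic][r.month] += 1
    return {topic: dict(sorted(months.items())) for topic, months in trend.items()}


if __name__ == "__main__":
    # Made-up example records for illustration only.
    records = [
        Feedback("survey", "2023-04", "learned about Digitalcheck late"),
        Feedback("support", "2023-04", "learned about Digitalcheck late"),
        Feedback("workshop", "2023-05", "wants visualizations"),
        Feedback("survey", "2023-05", "learned about Digitalcheck late"),
    ]
    print(count_by_topic(records).most_common(1))
    print(topic_trend(records))
```

Keeping the source on each record means the same counts can later be split by channel (survey, support, workshop) without re-categorizing anything.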

Joint decisions based on data

We discuss the findings we have gleaned from the various sources, often in a visualized format, in settings such as the inter-ministerial working group created specifically to develop Digitalcheck. Our latest blog post describes how we make joint decisions on the evolution of Digitalcheck there. The conclusions we can draw from existing data are very helpful in this process.

For example, the focus on visualizations as a way to trace lines of thinking and model processes originates in part from feedback received from policy drafting personnel in the Digitalcheck workshops.

As another example of how data-driven insights feed into the development of Digitalcheck, the first online survey, conducted in early April 2023, made clear that policy drafters needed a way to explain their reasoning.


The online survey showed that the vast majority of policy drafters were sending the NKR additional information on their policy plans, mostly by e-mail, because the documentation at the time offered no adequate way to include it. We changed this in the current version of Digitalcheck by adding fields where users can explain their reasoning, so that all relevant remarks fit directly into the accompanying Digitalcheck documentation for the NKR.

How insights from different sources fit together

The Digitalcheck application rate serves as a good example of how data collection, integrated views of various data sources, and shared visualization work in practice.

As of June 2023, the average application rate over the entire period since Digitalcheck launched in January 2023 stands at 59%. Comparing the monthly rates reveals a steep increase: In January, only 13% of regulatory initiatives included a Digitalcheck. During the transition period agreed with the NKR for the first quarter of 2023, the application rate rose to 77% by March, just two months later. The gap between the total number of regulatory initiatives and the number that include a Digitalcheck continues to narrow (an application rate of 83% in May).

Note:

There is a reason the application rate did not jump to 100% as soon as Digitalcheck became mandatory. Digitalcheck aims to bring about sweeping change in the legislative process: Policy drafters need to change how they work, consider digital readiness from the very start, and broaden their methodological approaches. Like any change, this transformation takes time, as we hear again and again in training and support sessions.
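The post does not say exactly how the 59% average for the whole period is calculated. One plausible reading is a pooled rate: all initiatives that included a Digitalcheck divided by all regulatory initiatives over the period. The sketch below contrasts that with an unweighted mean of the monthly percentages. The monthly counts are invented for illustration, since the post reports only percentages, and the function names are hypothetical.

```python
# Invented monthly counts for illustration only; the post reports percentages, not counts.
# Each entry: month -> (initiatives that included a Digitalcheck, all regulatory initiatives)
monthly_counts = {
    "2023-01": (2, 16),
    "2023-02": (9, 20),
    "2023-03": (20, 26),
}


def monthly_rates(counts):
    """Application rate per month: share of initiatives that included a Digitalcheck."""
    return {month: with_check / total for month, (with_check, total) in counts.items()}


def pooled_rate(counts):
    """Application rate over the whole period.

    Sums the counts first, so months with more initiatives carry more weight
    than they would in a plain average of the monthly percentages.
    """
    with_check = sum(w for w, _ in counts.values())
    total = sum(t for _, t in counts.values())
    return with_check / total


def mean_of_monthly_rates(counts):
    """Unweighted average of the monthly percentages, for comparison."""
    rates = monthly_rates(counts)
    return sum(rates.values()) / len(rates)


if __name__ == "__main__":
    for month, rate in monthly_rates(monthly_counts).items():
        print(f"{month}: {rate:.0%}")
    print(f"pooled rate: {pooled_rate(monthly_counts):.0%}")
    print(f"mean of monthly rates: {mean_of_monthly_rates(monthly_counts):.0%}")
```

With these invented counts, the pooled rate is 50%, while the unweighted mean of the monthly rates is about 45%: the later months have both higher rates and more initiatives, so pooling weights them more heavily.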

We merged this data set from the NKR secretariat with the results of our regular online survey of policy drafters. This showed that when the tool launched at the start of the year, many draft laws had already reached an advanced stage. In many cases, consultations were already well under way, so it was no longer possible to engage deeply with digital readiness at the content level.

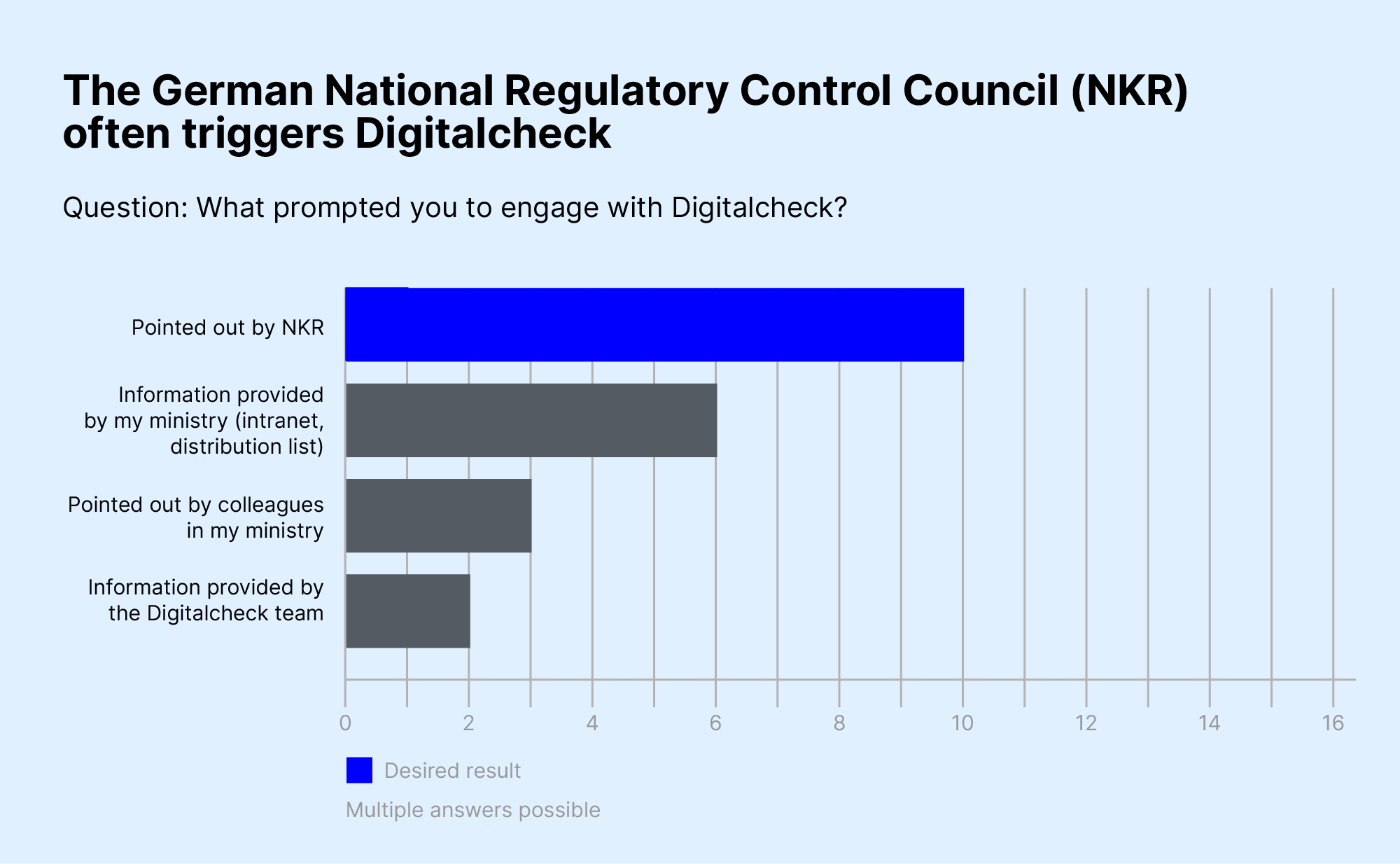

Another revealing result: In most cases, Digitalcheck is applied relatively late, after the NKR points it out.

Policy drafters often use Digitalcheck after being alerted to it by the National Regulatory Control Council – and therefore quite late in the drafting process.

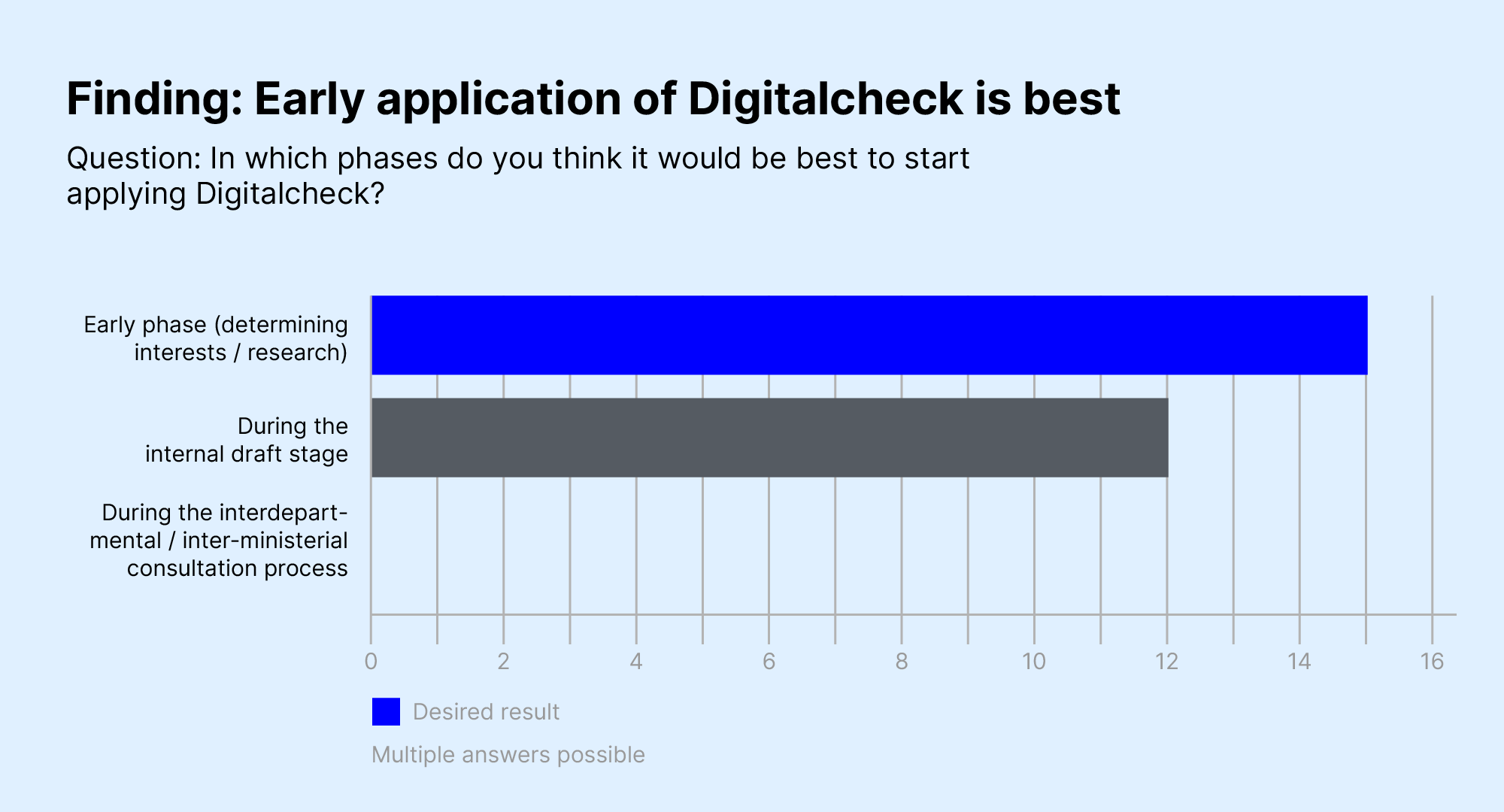

However, those drafters who have already worked with Digitalcheck confirm that digital readiness should be a key part of their thinking early on.

Survey respondents confirm that incorporating Digitalcheck at an early stage is a good idea. For us, this means continuing to approach policy drafters proactively and expanding our support offerings.

Building on this analysis, we have drawn the following conclusion and incorporated it into our further development and communication activities: People do see the value in incorporating Digitalcheck early on, although it has not yet become second nature. What that means for us is that we need to continue to approach policy drafters proactively, offering regular workshops and consultation hours, highlighting changes in our newsletter, and sharing relevant information through the inter-ministerial working group. This allows us to support the transformation that Digitalcheck necessitates for policy drafters’ day-to-day work and continue to actively promote these changes.

Overall, this example shows that it pays to generate data and use the resulting information as a solid basis for developing products further. The extra effort of running surveys or working with partners to collect data is well invested. Generating and linking qualitative and quantitative data allows us to gain insights, set priorities, and focus more sharply on user needs. Data also help make solutions more user-centered, so that they work better for everyone.

Any questions or comments about the subject of this post or Digitalcheck in general? Feel free to e-mail us at digitalcheck@digitalservice.bund.de. We’re happy to hear from you!

More information on how Digitalcheck came about and the content of Digitalcheck is available in our previous blog posts, “Establishing the foundations for a digital state through digital-ready legislation” and “Five principles for digital-ready legislation.”
